Balancing low latency streaming against streaming quality is a constant challenge for OTT platforms. Viewers expect near-real-time playback with minimal delay, yet even brief buffering or a visible drop in video quality can lead to frustration and churn.

However, many platforms that successfully achieve low latency continue to face high viewer drop-off, shortened watch times, and declining engagement. This disconnect highlights a critical misunderstanding: latency alone does not define streaming quality or viewer experience.

To understand why viewers still leave, we need to look at how real users experience streaming systems beyond a single performance metric.

Understanding Viewer Drop-Off in Streaming Platforms

Viewer drop-off refers to users abandoning a stream before completing a meaningful viewing session. For both live and on-demand platforms, this directly affects monetization, ad delivery, subscriber retention, and perceived platform reliability.

While latency determines how close playback is to real time, most viewer abandonment is driven by problems elsewhere in the session: slow startup, rebuffering, and playback failures. These issues erode trust quickly, even when latency metrics look impressive on internal dashboards.

What Low Latency Streaming Actually Improves

Low latency streaming is essential for use cases where timing accuracy matters, such as live sports, real-time interactions, auctions, and synchronized events. In these scenarios, delay directly impacts fairness, engagement, or participation.

What low latency does not automatically improve is the overall Quality of Experience (QoE). Many platforms reduce buffer sizes and segment durations to lower latency, which can introduce new points of failure if the rest of the streaming architecture is not designed to support those tighter tolerances.

As a result, platforms often improve speed while unintentionally sacrificing stability.
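The trade-off between buffer size and stability can be illustrated with back-of-the-envelope arithmetic. The sketch below assumes a simplified model in which live latency is roughly the media held in the player buffer plus a fixed pipeline overhead; the function names and the 2-second overhead are hypothetical values chosen for illustration, not measurements from any platform.

```python
def estimated_live_latency(segment_seconds: float,
                           segments_buffered: int,
                           pipeline_overhead_seconds: float = 2.0) -> float:
    """Rough latency estimate: buffered media plus an assumed fixed overhead
    for encoding, packaging, and delivery (the 2.0 s default is illustrative)."""
    return segment_seconds * segments_buffered + pipeline_overhead_seconds


def stall_margin_seconds(segment_seconds: float, segments_buffered: int) -> float:
    """How long the network can deliver nothing before the buffer runs dry."""
    return segment_seconds * segments_buffered


# A conventional configuration: three 6-second segments in the buffer.
conventional_latency = estimated_live_latency(6.0, 3)
conventional_margin = stall_margin_seconds(6.0, 3)

# A low latency configuration: two 1-second segments in the buffer.
low_latency = estimated_live_latency(1.0, 2)
low_latency_margin = stall_margin_seconds(1.0, 2)

print(f"conventional: ~{conventional_latency:.0f}s latency, {conventional_margin:.0f}s stall margin")
print(f"low latency:  ~{low_latency:.0f}s latency, {low_latency_margin:.0f}s stall margin")
```

Under these assumptions, the same change that cuts latency from about 20 seconds to about 4 seconds also cuts the time the player can survive a network hiccup from 18 seconds to 2 seconds.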

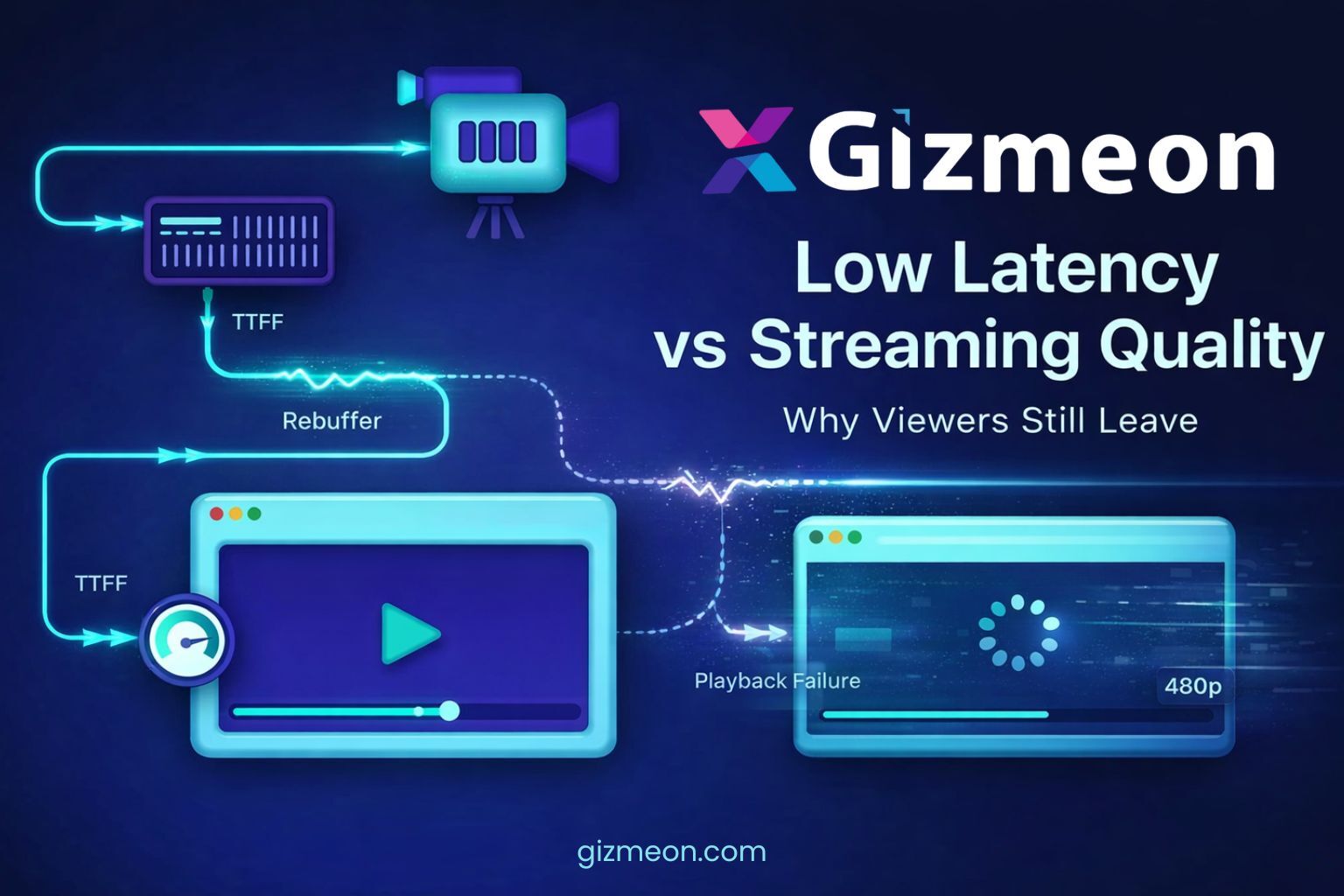

Startup Time Is the First Real Retention Test

For viewers, the experience begins the moment they press play. Time to First Frame (TTFF)—the delay before the first frame appears—is one of the strongest predictors of whether a viewer will stay or leave.

Startup time is influenced by multiple components working together: player initialization, DRM authentication, manifest delivery, initial segment downloads, and adaptive bitrate selection. Any delay in this chain increases the likelihood of abandonment.

Low latency configurations typically operate with smaller buffers, which can make startup more sensitive to network variability and CDN performance. In many cases, improving TTFF has a far greater impact on viewer retention than further reducing live latency.
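To find out which part of the startup chain is costing the most time, it can help to record each stage separately. The following is a minimal sketch that assumes the stages run one after another (real players often overlap some of them) and that the player already reports a duration for each stage; the `StartupTimings` class and its field names are hypothetical.

```python
from dataclasses import dataclass, asdict


@dataclass
class StartupTimings:
    """Per-stage startup durations in milliseconds (hypothetical field names)."""
    player_init_ms: float
    drm_license_ms: float
    manifest_ms: float
    first_segment_ms: float
    abr_decision_ms: float

    def ttff_ms(self) -> float:
        # Assumes the stages are sequential, so TTFF is their sum.
        return sum(asdict(self).values())

    def slowest_stage(self) -> str:
        # The stage with the largest duration is the first place to look.
        stages = asdict(self)
        return max(stages, key=stages.get)


timings = StartupTimings(
    player_init_ms=300,
    drm_license_ms=450,
    manifest_ms=120,
    first_segment_ms=600,
    abr_decision_ms=10,
)
print(timings.ttff_ms(), timings.slowest_stage())
```

In this made-up example the first segment download dominates, which would point toward CDN performance or the initial bitrate choice rather than the latency configuration.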

Buffering Remains the Fastest Way to Lose Viewers

Buffering and rebuffering continue to be among the most disruptive streaming issues. Even brief interruptions can cause viewers to abandon a stream, particularly when buffering occurs unpredictably.

Low latency streaming relies on short segments and minimal buffering, which increases exposure to bandwidth fluctuations, packet loss, and regional CDN inconsistencies. When adaptive bitrate streaming is not carefully tuned, this results in visible quality oscillation and repeated playback stalls.
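One common way to reduce quality oscillation is to make the bitrate selector switch down quickly but switch up cautiously. The sketch below is one possible rule of that kind, not the algorithm of any particular player; the function name, the safety factor of 0.8, and the up-switch headroom of 1.2 are illustrative assumptions.

```python
from typing import Sequence


def choose_bitrate(ladder_kbps: Sequence[int],
                   current_kbps: int,
                   throughput_kbps: float,
                   safety: float = 0.8,
                   up_headroom: float = 1.2) -> int:
    """Pick the next rendition with hysteresis (illustrative parameters)."""
    # Only plan to use a fraction of the measured throughput.
    budget = throughput_kbps * safety

    if current_kbps > budget:
        # Down-switch immediately to the highest rendition that fits,
        # or to the lowest rendition if nothing fits.
        fitting = [b for b in ladder_kbps if b <= budget]
        return max(fitting) if fitting else min(ladder_kbps)

    # Up-switch only when a higher rendition fits with extra headroom,
    # so small throughput swings do not cause repeated switching.
    upgrades = [b for b in ladder_kbps
                if b > current_kbps and b * up_headroom <= budget]
    return max(upgrades) if upgrades else current_kbps


ladder = [800, 1600, 3000, 6000]
print(choose_bitrate(ladder, current_kbps=1600, throughput_kbps=4000))
print(choose_bitrate(ladder, current_kbps=1600, throughput_kbps=5000))
print(choose_bitrate(ladder, current_kbps=3000, throughput_kbps=3000))
```

The asymmetry is the point of the design: a stall costs the viewer more than a few extra seconds at a lower rendition, so the selector gives up quality faster than it reclaims it.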

From the viewer’s perspective, the stream feels unreliable—regardless of how close it is to real time.

FAST Channels Expose the Limits of Low-Latency Thinking

FAST (Free Ad-Supported Television) channels amplify many of the challenges discussed above by introducing always-on, linear streaming experiences where consistency and reliability matter as much as speed. Unlike on-demand OTT viewing, FAST channels rely on predictable scheduling, uninterrupted playback, and stable ad delivery to sustain both viewer engagement and monetization. Even brief buffering events, playback failures, or quality drops can disrupt the viewing flow and directly impact ad impressions and revenue.

To operate FAST channels successfully, platforms must prioritize more than just low latency.

They need to optimize for:

- Fast and reliable startup performance to avoid early viewer drop-off

- Playback resilience that can recover gracefully from network fluctuations

- Consistent video quality across devices and screen types

- Stable ad delivery to protect impressions and revenue

Gizmeon supports these requirements by enabling content owners to launch and manage FAST channels with linear scheduling, server-side ad insertion (SSAI), multi-device distribution, and performance analytics. This allows platforms to scale ad-supported streaming efficiently while maintaining the reliability and viewing consistency that FAST audiences and advertisers expect. In FAST environments, latency alone is not a sufficient indicator of quality of experience or long-term platform success.

Device Fragmentation Complicates Streaming Quality

Streaming platforms must support a wide range of devices, including smart TVs, mobile phones, set-top boxes, and browsers with varying media capabilities. Each device handles decoding, memory management, and network conditions differently.

Low latency streaming places additional pressure on these devices by requiring faster segment processing and tighter synchronization. On lower-powered or older hardware, this often leads to dropped frames, playback freezes, or silent player failures.

Many of these failures never appear in high-level analytics but directly contribute to viewer drop-off.

Playback Failures Outweigh Latency Improvements

From a retention standpoint, reliability matters more than speed. Viewers are far more likely to abandon a platform after a playback failure than after experiencing a few seconds of delay.

Common failure scenarios include:

- Streams failing to resume after network interruptions

- Video freezing during bitrate changes

- Audio continuing while video stalls

- Player crashes with no recovery path

Low latency systems provide less margin for recovery because buffer depth is minimal. When something goes wrong, the system has fewer options to recover gracefully.

The Trade-Off Between Low Latency Streaming and Reliability

Every reduction in latency tightens tolerances across the video delivery pipeline. Segment alignment becomes more critical, clock drift becomes visible, and error recovery windows shrink.

Without strong observability, intelligent fallback mechanisms, and region-aware CDN routing, low latency streaming architectures can become fragile. Fragile systems may perform well under ideal conditions but fail under real-world network variability—exactly when reliability matters most.

In practice, platforms using solutions from Gizmeon often focus on observability, fallback logic, and playback resilience before pushing latency boundaries.
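As an illustration of what fallback logic can look like, the sketch below widens the player's latency target after each stall and tightens it again only after a period of stable playback. It is a simplified, hypothetical controller: the class name, the 2 to 12 second range, the step size, and the 60-second stability window are assumptions, not values from any specific player or from Gizmeon's products.

```python
class LatencyTargetController:
    """Trades latency for resilience when stalls occur (illustrative values)."""

    def __init__(self, min_target_s: float = 2.0, max_target_s: float = 12.0,
                 step_s: float = 2.0, stable_window_s: float = 60.0) -> None:
        self.min_target_s = min_target_s
        self.max_target_s = max_target_s
        self.step_s = step_s
        self.stable_window_s = stable_window_s
        self.target_s = min_target_s   # start at the most aggressive target
        self._stable_s = 0.0           # stall-free playback since last change

    def on_stall(self) -> float:
        # A stall means the buffer was too thin: back off and reset the clock.
        self.target_s = min(self.max_target_s, self.target_s + self.step_s)
        self._stable_s = 0.0
        return self.target_s

    def on_playback(self, seconds: float) -> float:
        # Tighten the target one step after a full window of stable playback.
        self._stable_s += seconds
        if self._stable_s >= self.stable_window_s and self.target_s > self.min_target_s:
            self.target_s = max(self.min_target_s, self.target_s - self.step_s)
            self._stable_s = 0.0
        return self.target_s


controller = LatencyTargetController()
print(controller.on_stall())        # target widens after a stall
print(controller.on_playback(60))   # target tightens after a stable window
```

A controller like this makes latency a per-session outcome rather than a fixed setting: viewers on good networks stay close to real time, while viewers on unstable networks get a deeper buffer instead of repeated stalls.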

Metrics That Actually Predict Viewer Retention

Latency is an easy metric to track, but it is not the most meaningful indicator of viewer satisfaction. Metrics that correlate more closely with retention reflect actual user experience, including:

- Time to First Frame (TTFF)

- Rebuffering ratio

- Playback failure rate

- Quality stability over time

- Session completion rate

These Quality of Experience (QoE) metrics reveal where streaming systems succeed or fail from the viewer’s perspective.
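The metrics above can be computed from per-session playback records. The sketch below shows one plausible set of definitions; exact definitions vary between analytics tools, and the `Session` fields, the use of bitrate switches per minute as a proxy for quality stability, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Session:
    """One playback session (hypothetical schema)."""
    ttff_ms: Optional[float]   # None if the first frame never appeared
    watch_s: float             # seconds of video actually played
    stall_s: float             # seconds spent rebuffering
    bitrate_switches: int      # number of rendition changes
    failed: bool               # ended with a playback error
    completed: bool            # reached a meaningful end point


def summarize(sessions: List[Session]) -> Dict[str, float]:
    if not sessions:
        raise ValueError("no sessions to summarize")
    total = len(sessions)
    watch = sum(s.watch_s for s in sessions)
    stall = sum(s.stall_s for s in sessions)
    started = [s.ttff_ms for s in sessions if s.ttff_ms is not None]
    return {
        "median_ttff_ms": median(started) if started else float("nan"),
        # Share of session time spent stalled rather than playing.
        "rebuffering_ratio": stall / (watch + stall) if watch + stall else 0.0,
        "playback_failure_rate": sum(s.failed for s in sessions) / total,
        # Proxy for quality stability: fewer switches per minute is steadier.
        "switches_per_minute": sum(s.bitrate_switches for s in sessions) / (watch / 60) if watch else 0.0,
        "session_completion_rate": sum(s.completed for s in sessions) / total,
    }


sample = [
    Session(ttff_ms=800, watch_s=540, stall_s=60, bitrate_switches=4, failed=False, completed=True),
    Session(ttff_ms=1200, watch_s=600, stall_s=0, bitrate_switches=2, failed=False, completed=False),
    Session(ttff_ms=None, watch_s=0, stall_s=0, bitrate_switches=0, failed=True, completed=False),
]
for name, value in summarize(sample).items():
    print(f"{name}: {value:.3f}")
```

Note that the third sample session never starts, so it contributes nothing to a latency average but still counts against the failure and completion rates, which is why these metrics can diverge from a latency dashboard.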

When Low Latency Streaming Truly Adds Value

Low latency is essential when real-time interaction affects outcomes or when synchronization with live events is critical. In these cases, latency reduction must be paired with adaptive buffering strategies, resilient player logic, and continuous monitoring.

Without those supporting systems, low latency alone can increase operational risk rather than improve viewer engagement.

Designing Streaming Platforms for Real Users

Viewer drop-off is rarely caused by a single technical issue. It is the cumulative effect of slow startup, buffering, playback failures, device incompatibility, and poor recovery behavior.

Low latency streaming should be treated as one component of a broader streaming performance strategy—not the end goal. Platforms that optimize for stability, predictability, and real-world viewing conditions are far more likely to retain viewers over time. From our work at Gizmeon, this is often where streaming platforms see the biggest gains in retention and monetization.

Without the right infrastructure, low latency streaming alone cannot guarantee viewer retention or playback satisfaction. The real question for streaming platforms is not how low latency can go, but how reliable the experience remains at that latency.

When reliability and quality come first, latency becomes a strategic choice—not a vanity metric.